The goal of this project was to restore the 3D position of a camera based only on a 2D sequence of video frames containing a calibrated grid. This was needed for a TV show production, in what we would now call Augmented Reality.

The program accepts a video stream as input, detects the positions of the pattern markers, performs a reverse 3D projection and writes the result to a data file containing a timeline of the camera's 3D positions. This file was then loaded into a 3D graphics application such as Max or Maya to build the virtual 3D space. As a result, the virtual 3D world and the live video were synchronised, creating the AR illusion.

The most interesting parts of the application are:

- pattern detection in the frames of the video stream;

- "reverse projecting" - finding the point in 3D space from which the camera took the shot.
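"Reverse projecting" inverts the usual pinhole camera model. As a minimal sketch of the forward direction, assuming an ideal pinhole camera with no lens distortion, square pixels and image coordinates measured from the image centre, the functions below map a world point to image coordinates and relate focal length to FOV. `project_point` and `horizontal_fov_deg` are hypothetical names, not taken from the original source. The perspective division is where depth is lost, which is why the known geometry of the calibrated grid is needed to recover the camera position.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]  # row-major 3x3 rotation, world -> camera


def project_point(point: Vec3, rotation: Mat3, camera_pos: Vec3,
                  focal: float) -> Tuple[float, float]:
    """Pinhole projection of a world point; returns (u, v) relative to the image centre."""
    # Vector from the camera centre to the point, in world coordinates.
    d = [point[i] - camera_pos[i] for i in range(3)]
    # Rotate into the camera frame (the camera looks along +z).
    xc, yc, zc = [sum(rotation[r][k] * d[k] for k in range(3)) for r in range(3)]
    if zc <= 0:
        raise ValueError("point is behind the camera")
    # Perspective division: the depth zc is discarded here.
    return focal * xc / zc, focal * yc / zc


def horizontal_fov_deg(focal: float, image_width: float) -> float:
    """Horizontal field of view in degrees for a focal length given in pixels."""
    return math.degrees(2.0 * math.atan(image_width / (2.0 * focal)))


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(project_point((1.0, 2.0, 0.0), identity, (0.0, 0.0, -10.0), 800.0))  # (80.0, 160.0)
print(horizontal_fov_deg(360.0, 720.0))  # approximately 90.0
```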

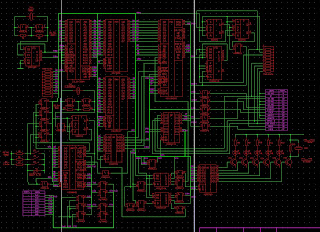

The application accepts as input an AVI file whose frames contain the scene, the actors and the special pattern (light blue lines on the background), like this:

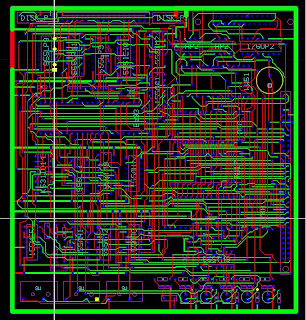

Pattern detection

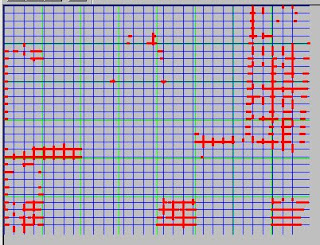

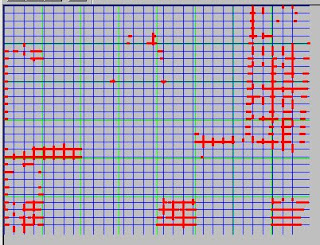

The frame is first decomposed using a blue chroma key to separate the actors from the blue background. The algorithm then detects the traces of the calibrated pattern in the image: the frame is scanned in two directions, the pattern traces are marked based on the colour gradient, and the lines of the pattern are detected as "hypotheses". The two images above show the debug output of this stage.
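A minimal sketch of this stage in pure Python, for illustration only. The assumptions are ours, not the original program's: a frame is a nested list of `(r, g, b)` tuples; "blue" is a simple blue-dominance test with a hypothetical `margin`; a trace is marked where brightness rises by more than a hypothetical `threshold` between two neighbouring keyed pixels; and a line hypothesis is a total-least-squares fit through marks that already belong to one line. Grouping the marks into separate lines (for example with a Hough transform) is left out.

```python
import math
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]        # (r, g, b), each 0..255
Frame = Sequence[Sequence[Pixel]]   # frame[y][x]


def is_key_blue(p: Pixel, margin: int = 40) -> bool:
    """Crude chroma-key test: blue clearly dominates red and green."""
    r, g, b = p
    return b - max(r, g) > margin


def brightness(p: Pixel) -> float:
    """Luma approximation with ITU-R BT.601 weights."""
    r, g, b = p
    return 0.299 * r + 0.587 * g + 0.114 * b


def _rising_edge(a: Pixel, b: Pixel, threshold: float) -> bool:
    # Both pixels must be keyed background, so edges on the actors are ignored.
    return (is_key_blue(a) and is_key_blue(b)
            and brightness(b) - brightness(a) > threshold)


def mark_traces(frame: Frame, threshold: float = 40.0) -> List[Tuple[int, int]]:
    """Scan rows, then columns, and mark pixels where a brighter pattern line begins."""
    h, w = len(frame), len(frame[0])
    marks = set()
    for y in range(h):                  # horizontal scan: finds near-vertical lines
        for x in range(1, w):
            if _rising_edge(frame[y][x - 1], frame[y][x], threshold):
                marks.add((x, y))
    for x in range(w):                  # vertical scan: finds near-horizontal lines
        for y in range(1, h):
            if _rising_edge(frame[y - 1][x], frame[y][x], threshold):
                marks.add((x, y))
    return sorted(marks)


def fit_line(points: Sequence[Tuple[float, float]]) -> Tuple[float, float, float]:
    """Total-least-squares line hypothesis: (centroid x, centroid y, angle in radians)."""
    n = len(points)
    if n < 2:
        raise ValueError("need at least two points")
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    sxx = sum((x - cx) ** 2 for x, _ in points)
    syy = sum((y - cy) ** 2 for _, y in points)
    sxy = sum((x - cx) * (y - cy) for x, y in points)
    # Direction of the principal axis of the point scatter.
    return cx, cy, 0.5 * math.atan2(2.0 * sxy, sxx - syy)


# Synthetic 10x10 frame: dark blue background with one light blue column at x = 5.
DARK, LIGHT = (20, 40, 200), (120, 180, 255)
frame = [[LIGHT if x == 5 else DARK for x in range(10)] for y in range(10)]
print(fit_line(mark_traces(frame)))  # vertical line hypothesis through x = 5
```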

Restore 3D position

The second part of the process restores the camera's 3D position (and FOV) from the 2D pattern. The algorithm takes the pattern "hypotheses" from the previous step and detects the scale, skew and rotation of the calibrated grid. From this data it calculates the 3D position of the camera at the moment the image was shot.
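One standard way to recover a camera from a flat calibrated grid is a planar homography followed by a pose decomposition (the approach used in Zhang-style camera calibration). The sketch below swaps in that technique; it is not necessarily what the original program did with scale, skew and rotation. Assumptions: no lens distortion, square pixels, image coordinates measured from the principal point (image centre), the grid lying in the plane Z = 0, and exactly four known grid intersections with no three collinear. All function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point2 = Tuple[float, float]
Mat3 = List[List[float]]


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a small dense system a x = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]   # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[piv][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):   # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def homography_4pt(grid: Sequence[Point2], image: Sequence[Point2]) -> Mat3:
    """Homography H (normalised so h33 = 1) mapping grid (X, Y) to image (u, v)."""
    a: List[List[float]] = []
    b: List[float] = []
    for (X, Y), (u, v) in zip(grid, image):
        # u * (h31 X + h32 Y + 1) = h11 X + h12 Y + h13, and likewise for v.
        a.append([X, Y, 1, 0, 0, 0, -u * X, -u * Y]); b.append(u)
        a.append([0, 0, 0, X, Y, 1, -v * X, -v * Y]); b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def focal_from_homography(H: Mat3) -> float:
    """Focal length in pixels from r1 . r2 = 0, assuming K = diag(f, f, 1)."""
    denom = H[2][0] * H[2][1]
    if abs(denom) < 1e-12:
        raise ValueError("focal length is not observable from this view")
    f2 = -(H[0][0] * H[0][1] + H[1][0] * H[1][1]) / denom
    if f2 <= 0:
        raise ValueError("inconsistent homography (noise or wrong principal point)")
    return math.sqrt(f2)


def _norm(v: Sequence[float]) -> float:
    return math.sqrt(sum(c * c for c in v))


def camera_centre(H: Mat3, focal: float) -> Tuple[float, float, float]:
    """Camera centre in grid coordinates (grid plane is Z = 0)."""
    # Columns of K^-1 H are lambda * (r1, r2, t) for some unknown scale lambda.
    cols = [[H[0][j] / focal, H[1][j] / focal, H[2][j]] for j in range(3)]
    scale = 2.0 / (_norm(cols[0]) + _norm(cols[1]))   # r1 and r2 have unit length
    r1, r2, t = ([scale * c for c in col] for col in cols)
    if t[2] < 0:   # assume the grid origin lies in front of the camera
        r1, r2, t = ([-c for c in v] for v in (r1, r2, t))
    r3 = [r1[1] * r2[2] - r1[2] * r2[1],
          r1[2] * r2[0] - r1[0] * r2[2],
          r1[0] * r2[1] - r1[1] * r2[0]]
    # R has columns r1, r2, r3 and t = -R C, so C = -R^T t, i.e. C_i = -(r_i . t).
    x, y, z = (-sum(r[k] * t[k] for k in range(3)) for r in (r1, r2, r3))
    return x, y, z
```

The FOV then follows from the estimated focal length and the image width. With many grid intersections per frame, a least-squares (DLT) fit over all of them would replace the four-point solve, and the recovered rotation would usually be re-orthogonalised to absorb noise.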

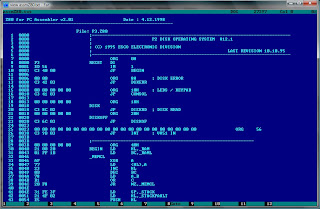

The application performs these calculations for the whole AVI sequence and produces a sequence of 3D camera positions for export to 3D Max.
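A sketch of that final loop, assuming a hypothetical `solve_frame` callback that returns the camera position and FOV for one frame (or `None` when the grid is not found) and a plain CSV layout. The original data-file format is not described here, so the column names and the 25 fps default (PAL) are assumptions; importing such a file into 3D Max or Maya would still need a small import script on that side.

```python
import csv
import io
from typing import Callable, Iterable, Optional, TextIO, Tuple

Pose = Tuple[float, float, float, float]   # x, y, z, horizontal FOV in degrees


def write_camera_track(frames: Iterable[object],
                       solve_frame: Callable[[object], Optional[Pose]],
                       out: TextIO, fps: float = 25.0) -> int:
    """Solve every frame and write one CSV row per solved frame; returns the row count."""
    writer = csv.writer(out)
    writer.writerow(["frame", "time_s", "x", "y", "z", "fov_deg"])
    rows = 0
    for index, frame in enumerate(frames):
        pose = solve_frame(frame)
        if pose is None:   # grid not found: skip the frame, gaps can be interpolated later
            continue
        writer.writerow([index, f"{index / fps:.4f}", *(f"{v:.6f}" for v in pose)])
        rows += 1
    return rows


buf = io.StringIO()
# Toy solver: frame 1 "fails", the others return a fixed pose shifted along x.
count = write_camera_track(range(3), lambda i: None if i == 1 else (float(i), 0.0, -10.0, 45.0), buf)
print(count)  # 2
```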

I developed the application entirely on my own; the source code is available on GitHub.